NAACL 2022: On Transferability of Prompt Tuning for Natural Language Processing

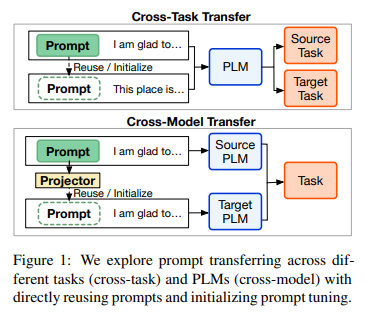

Citation (BibTeX key su-etal-2022-transferability): Yusheng Su, Xiaozhi Wang, Yujia Qin, Chi-Min Chan, Yankai Lin, Huadong Wang, Kaiyue Wen, Zhiyuan Liu, Peng Li, Juanzi Li, Lei Hou, Maosong Sun, and Jie Zhou. 2022. "On Transferability of Prompt Tuning for Natural Language Processing." Edited by Marine Carpuat, Marie-Catherine de Marneffe, ...

Abstract: Prompt tuning (PT) is a promising parameter-efficient method to utilize extremely large pre-trained language models (PLMs), which can achieve comparable performance to full-parameter fine-tuning by only tuning a few soft prompts. However, PT requires much more training time than fine-tuning. Intuitively, knowledge transfer can help to improve the efficiency. To explore whether we can improve ...

The overall results are shown in Table 4. We can see that: (1) the overlapping rate of activated neurons (ON) metric works better than all the embedding similarities, which suggests that model stimulation is more important for prompt transferability than embedding distances; (2) ON works much worse on T5-XXL.

A code repository accompanies the paper and describes itself as the source code of "On Transferability of Prompt Tuning for Natural Language Processing", a NAACL 2022 paper.

The paper finds that the overlapping rate of activated neurons strongly reflects transferability, which suggests that how the prompts stimulate PLMs is essential, and that prompt transfer is promising for improving PT.

ResearchGate lists the paper, by Yusheng Su and others, with a publication date of January 1, 2022.
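To make the idea of an overlapping rate of activated neurons concrete, here is a minimal sketch on toy data. It is not taken from the paper's released code. The function names, the zero threshold for counting a neuron as "activated", and the use of cosine similarity over binary activation masks are all assumptions chosen for illustration.

```python
import math
from typing import List, Sequence


def cosine(u: Sequence[float], v: Sequence[float]) -> float:
    """Cosine similarity between two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0.0 or norm_v == 0.0:
        return 0.0
    return dot / (norm_u * norm_v)


def activation_mask(acts: Sequence[float], threshold: float = 0.0) -> List[float]:
    """Mark a neuron as activated (1.0) when its value exceeds the threshold.

    The threshold of 0.0 is an assumption for this sketch.
    """
    return [1.0 if a > threshold else 0.0 for a in acts]


def overlap_rate(acts_a: Sequence[float], acts_b: Sequence[float]) -> float:
    """Hypothetical overlap score: cosine similarity of the two binary activation masks."""
    return cosine(activation_mask(acts_a), activation_mask(acts_b))


# Toy neuron activations recorded while the model runs with two different soft prompts.
acts_prompt_a = [0.9, -0.2, 0.4, 0.0, 1.3, -0.7]
acts_prompt_b = [0.1, -0.5, 0.8, -0.1, 0.2, 0.6]

# Toy prompt embeddings, used for the embedding-similarity baseline.
emb_prompt_a = [0.2, -0.1, 0.5]
emb_prompt_b = [-0.3, 0.4, 0.1]

print("activation overlap:", round(overlap_rate(acts_prompt_a, acts_prompt_b), 3))
print("embedding cosine:  ", round(cosine(emb_prompt_a, emb_prompt_b), 3))
```

In this toy case the two prompts look dissimilar in embedding space but activate almost the same neurons. That is the kind of difference between the two families of metrics that the snippet above describes.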
