GPUDirect RDMA on NVIDIA Jetson AGX Xavier | NVIDIA Technical Blog

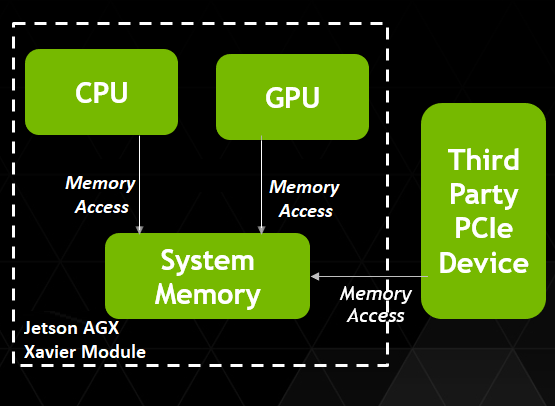

Remote direct memory access (RDMA) allows computers to exchange data in memory without the involvement of a CPU. The benefits include low-latency, high-bandwidth data exchange. GPUDirect RDMA extends the same philosophy to the GPU and the connected peripherals in Jetson AGX Xavier, enabling a direct path for data exchange.

GPUDirect RDMA is supported on the Jetson AGX Xavier platform starting from CUDA 10.1, and on DRIVE AGX Xavier Linux-based platforms starting from CUDA 11.2. Refer to Porting to Tegra for details. On arm64, the necessary peer-to-peer functionality depends on both the hardware and the software of the particular platform.

Inspired by this blog post: NVIDIA Technical Blog, 11 Jun 19, "GPUDirect RDMA on NVIDIA Jetson AGX Xavier". According to the official guide for porting GPUDirect RDMA code to Jetson, the cuMemAlloc call must be replaced by a cuMemAllocHost call followed by cuMemHostGetDevicePointer. I'm not sure whether the AGX kernel 4.9.140-tegra supports OFED GPUDirect RDMA, but I followed this link anyway (Mellanox OFED GPUDirect RDMA), and I'm able to successfully…
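
As a rough illustration of the version requirements above, the following C sketch gates GPUDirect RDMA on the CUDA version number. It assumes the integer encoding CUDA uses for the `CUDA_VERSION` macro and `cuDriverGetVersion` (1000 * major + 10 * minor, so CUDA 10.1 is 10010). The `platform_t` enum and the `gpudirect_rdma_supported` function are hypothetical names made up for this example. Passing the check is necessary but not sufficient, since peer-to-peer support on arm64 also depends on the platform's hardware and software.

```c
#include <stdbool.h>

/* Hypothetical platform identifiers, for illustration only. */
typedef enum {
    PLATFORM_JETSON_AGX_XAVIER,
    PLATFORM_DRIVE_AGX_XAVIER_LINUX
} platform_t;

/* CUDA encodes versions as 1000 * major + 10 * minor (CUDA 10.1 -> 10010). */
static int cuda_version_code(int major, int minor) {
    return 1000 * major + 10 * minor;
}

/* Minimum CUDA version with GPUDirect RDMA support, per the text:
 * 10.1 on Jetson AGX Xavier, 11.2 on Linux-based DRIVE AGX Xavier.
 * A true result is necessary but not sufficient: the platform's
 * hardware and software must also provide peer-to-peer support. */
static bool gpudirect_rdma_supported(platform_t platform, int cuda_version) {
    switch (platform) {
    case PLATFORM_JETSON_AGX_XAVIER:
        return cuda_version >= cuda_version_code(10, 1);
    case PLATFORM_DRIVE_AGX_XAVIER_LINUX:
        return cuda_version >= cuda_version_code(11, 2);
    }
    return false;
}
```

In practice, `cuda_version` could come from the `CUDA_VERSION` macro in `cuda.h` at build time or from `cuDriverGetVersion` at run time.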

If the pointer provided to the communication library is a host memory pointer, what role (if any) does the NVIDIA kernel driver play? In fact, I observed data being transferred to the buffer allocated with cuMemAllocHost even when I disabled the nv_peer_mem driver. This makes me wonder about the difference between GPUDirect RDMA and regular DMA in the…

This repository provides a minimal hardware-based demonstration of GPUDirect RDMA. This feature allows a PCIe device to directly access CUDA memory, thus allowing zero-copy sharing of data between CUDA and a PCIe device. The code supports NVIDIA Jetson AGX Xavier (Jetson) running Linux for Tegra (L4T), and NVIDIA DRIVE AGX Xavier running embedded…

